Great EETimes.Com news story!

Check this out:

Jerry Jones

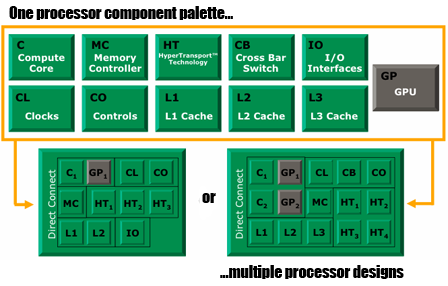

Aiming to leapfrog archrival Intel Corp., Advanced Micro Devices will deliver a wide range of merged x86 CPUs with on-board graphics accelerators starting in late 2008. AMD announced its so-called Fusion program Wednesday (Oct. 25) upon the formal completion of its $5.4 billion acquisition of graphics and chip set designer ATI Technologies Inc. The merged company will ship versions of the combined processors for laptops, desktops, workstations, servers and consumer electronics devices geared for emerging markets.
